Research Update

Tyler Becker

Regret for not playing pure strategy \(\sigma'\), having instead played \(\sigma^t\)

Regret Matching

Decompose joint strategy \(\sigma\) into \(\sigma_i, \sigma_{-i} \)
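A minimal sketch of the regret-matching rule, assuming the standard form in which each action is played with probability proportional to its positive cumulative regret (uniform when no regret is positive). The function names and the `RPS` payoff table are illustrative assumptions, not taken from the slides.

```python
# Row player's payoff in rock-paper-scissors; rows and columns are ordered R, P, S.
RPS = [[0, -1, 1], [1, 0, -1], [-1, 1, 0]]


def regret_matching(cumulative_regret):
    """Return a mixed strategy proportional to positive cumulative regret."""
    positive = [max(r, 0.0) for r in cumulative_regret]
    total = sum(positive)
    if total > 0.0:
        return [p / total for p in positive]
    # No action has positive regret: fall back to the uniform strategy.
    n = len(cumulative_regret)
    return [1.0 / n] * n


def instantaneous_regret(payoffs, strategy, opponent_action):
    """Regret of each pure action relative to the mixed strategy actually played.

    payoffs[a][b] is our payoff for action a against opponent action b.
    """
    action_values = [row[opponent_action] for row in payoffs]
    expected = sum(p * v for p, v in zip(strategy, action_values))
    return [v - expected for v in action_values]
```

For example, having played pure rock against paper, `instantaneous_regret(RPS, [1.0, 0.0, 0.0], 1)` assigns zero regret to rock, 1 to paper, and 2 to scissors.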

Finding Unexploitable Strategies

Finding a maximally exploitative strategy against a static opponent

Converging to unexploitable strategy via self-play
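Both uses of regret matching can be sketched on rock-paper-scissors. The code below is an illustration under stated assumptions, not the implementation behind these slides: it uses expected (not sampled) regret updates, a square zero-sum payoff matrix, and a small hypothetical initial regret so that self-play does not start exactly at the uniform equilibrium. `train` and its `static_opponent` parameter are names invented for this example.

```python
RPS = [[0, -1, 1], [1, 0, -1], [-1, 1, 0]]  # row player's payoff; order R, P, S


def regret_matching(cumulative_regret):
    positive = [max(r, 0.0) for r in cumulative_regret]
    total = sum(positive)
    n = len(cumulative_regret)
    return [p / total for p in positive] if total > 0.0 else [1.0 / n] * n


def action_values(payoffs, opponent_strategy):
    """Expected payoff of each pure action against a mixed opponent strategy."""
    return [sum(p * q for p, q in zip(row, opponent_strategy)) for row in payoffs]


def train(payoffs, iterations, static_opponent=None):
    """Return the average strategies of the row and column players."""
    n = len(payoffs)
    # Zero-sum: the column player's payoff for (b, a) is -payoffs[a][b].
    col_payoffs = [[-payoffs[a][b] for a in range(n)] for b in range(n)]
    # Assumed asymmetric start so the self-play dynamics are not trivial.
    regrets = [[1.0] + [0.0] * (n - 1), [0.0] * n]
    sums = [[0.0] * n, [0.0] * n]
    for _ in range(iterations):
        row = regret_matching(regrets[0])
        col = list(static_opponent) if static_opponent else regret_matching(regrets[1])
        for player, own, values in (
            (0, row, action_values(payoffs, col)),
            (1, col, action_values(col_payoffs, row)),
        ):
            expected = sum(p * v for p, v in zip(own, values))
            for a in range(n):
                regrets[player][a] += values[a] - expected
                sums[player][a] += own[a]
    return [[s / iterations for s in player_sum] for player_sum in sums]


self_play_row, self_play_col = train(RPS, 20000)
exploit_row, _ = train(RPS, 20000, static_opponent=[0.5, 0.3, 0.2])
```

In self-play the average strategies approach the uniform equilibrium. Against the rock-heavy static opponent, the row player's average strategy concentrates on paper, the best response.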

[Diagram labels: 1, 2, ..., \(N\)]

Ability to solve arbitrarily sized matrix games efficiently

\(\mathcal{A} \in \mathbb{R}^{N\times N}\)

Static Opponent Strategy

Non-uniform Equilibria

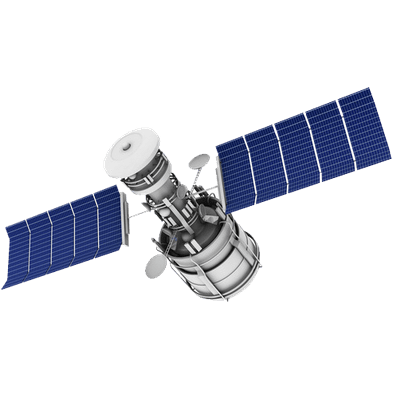
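One way to check how close a strategy pair is to equilibrium in a zero-sum matrix game is to sum each player's best-response value, which is zero exactly at equilibrium. The sketch below assumes that measure. `WEIGHTED_RPS` is a hypothetical variant (rock beating scissors pays double) chosen only because its equilibrium is non-uniform; it is not the game shown in the slides.

```python
# Hypothetical weighted rock-paper-scissors: rock beating scissors is worth 2.
# Row player's payoff; rows and columns are ordered R, P, S.
WEIGHTED_RPS = [[0, -1, 2], [1, 0, -1], [-2, 1, 0]]


def exploitability(payoffs, row_strategy, col_strategy):
    """Sum of both players' best-response values in a zero-sum matrix game."""
    rows, cols = len(payoffs), len(payoffs[0])
    # Best pure reply for the row player against the column mix.
    row_best = max(
        sum(payoffs[a][b] * col_strategy[b] for b in range(cols))
        for a in range(rows)
    )
    # Best pure reply for the column player, whose payoff is the negation.
    col_best = max(
        sum(-payoffs[a][b] * row_strategy[a] for a in range(rows))
        for b in range(cols)
    )
    return row_best + col_best


uniform = [1.0 / 3.0] * 3
equilibrium = [0.25, 0.5, 0.25]
print(exploitability(WEIGHTED_RPS, uniform, uniform))
print(exploitability(WEIGHTED_RPS, equilibrium, equilibrium))
```

In this variant the uniform strategy is exploitable (best-response values of 1/3 per player), while the mix (1/4, 1/2, 1/4) is not.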

Extensive Form Games

- Reasoning about action histories

[Figure: rock-paper-scissors game tree. Player 1 chooses R, P, or S; Player 2 then chooses R, P, or S. Leaf payoffs: (0,0), (-1,1), (1,-1).]

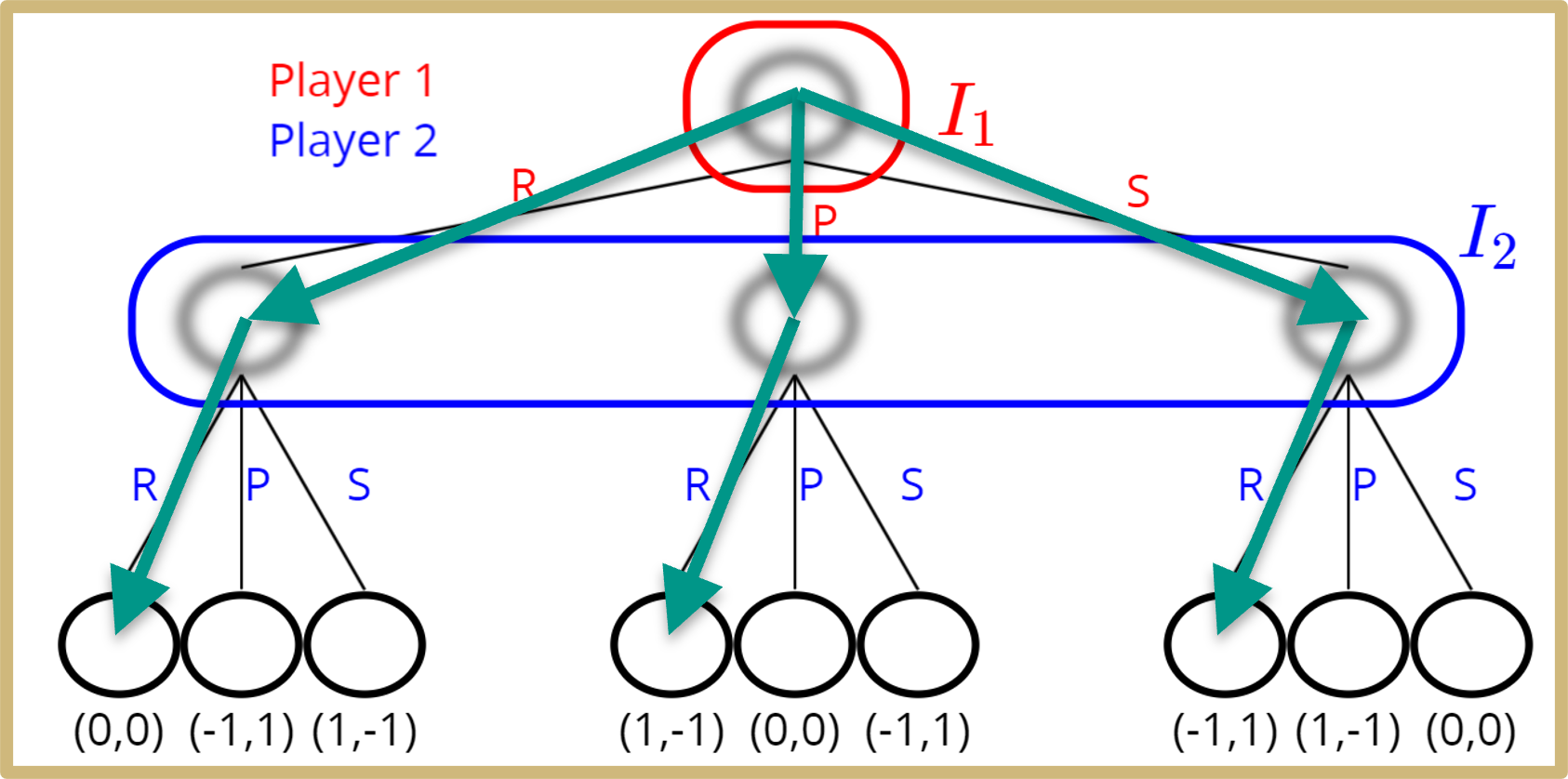

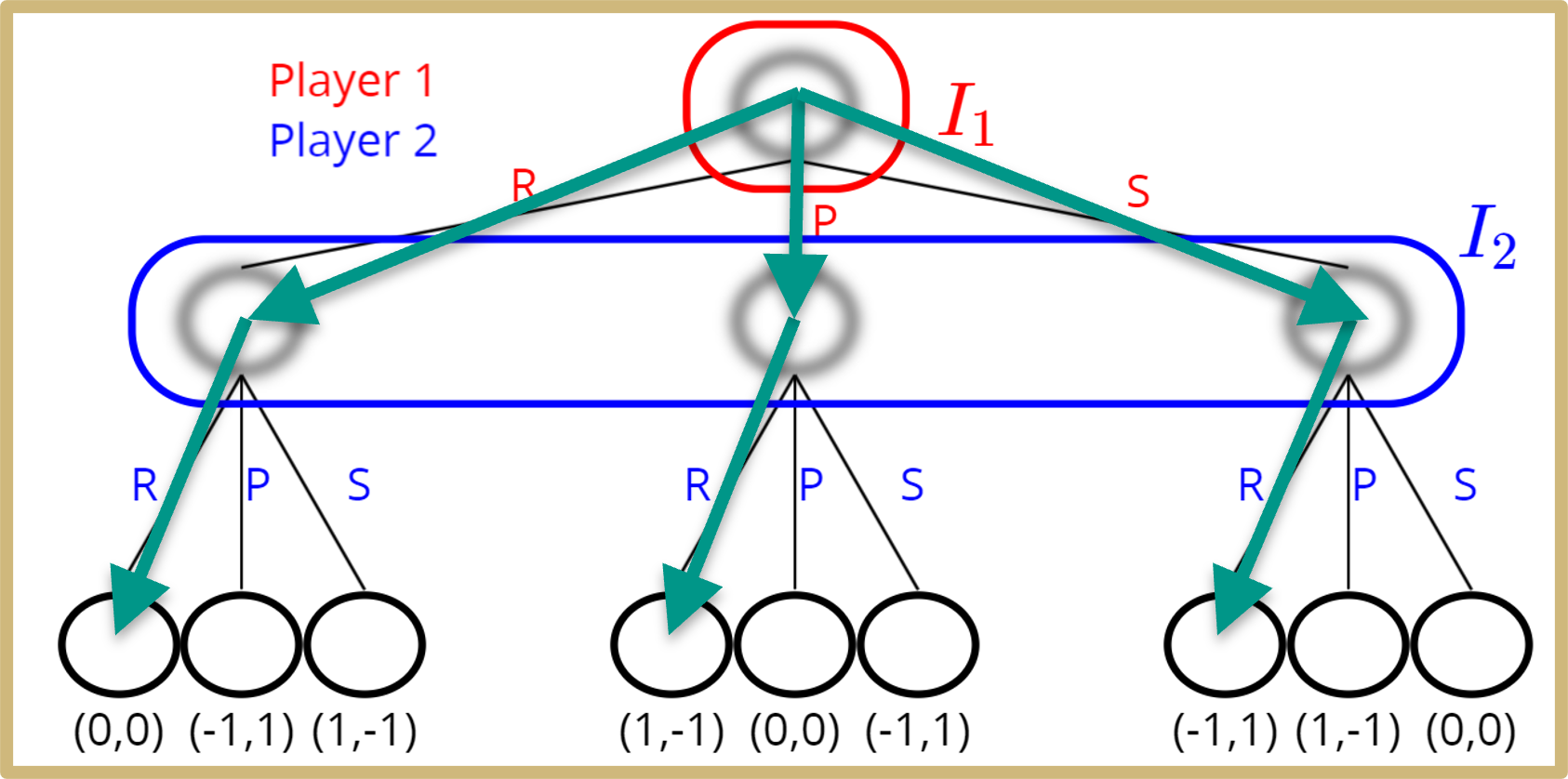

Imperfect Information Extensive Form Games

- Reasoning about information sets
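The difference between reasoning over action histories and over information sets can be sketched by grouping Player 2's decision nodes by what Player 2 can observe. This is an illustrative assumption about how the rock-paper-scissors tree would be encoded; `info_set_key` and `group_decision_nodes` are hypothetical helpers.

```python
from collections import defaultdict

ACTIONS = ("R", "P", "S")


def info_set_key(player, history, observes_opponent):
    """Label for what `player` knows at `history` (a tuple of past actions)."""
    if observes_opponent:
        # Perfect information: the full action history is known.
        return (player, history)
    # Imperfect information: only the number of moves made so far is known.
    return (player, len(history))


def group_decision_nodes(observes_opponent):
    """Map each information-set key to the histories it contains."""
    groups = defaultdict(list)
    groups[info_set_key(1, (), observes_opponent)].append(())
    for action in ACTIONS:
        history = (action,)
        groups[info_set_key(2, history, observes_opponent)].append(history)
    return dict(groups)


print(len(group_decision_nodes(True)))   # one node per history
print(len(group_decision_nodes(False)))  # Player 2's nodes share one set
```

With observation, Player 2 has three separate decision nodes. Without it, the three histories fall into a single information set, so Player 2 must use one strategy for all of them.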

[Figure: rock-paper-scissors game tree for the imperfect-information case. Player 1 chooses R, P, or S; Player 2 chooses R, P, or S. Leaf payoffs: (0,0), (-1,1), (1,-1).]

Counterfactual Regret Minimization

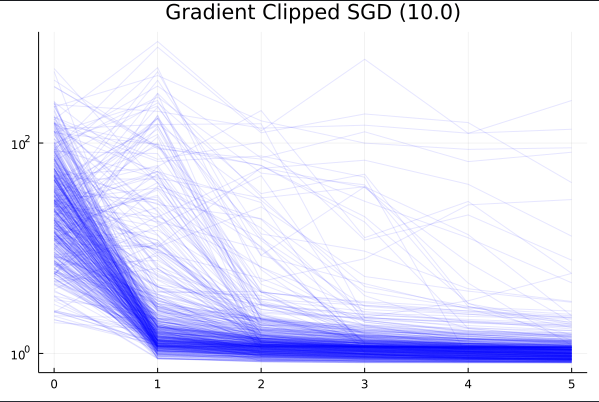

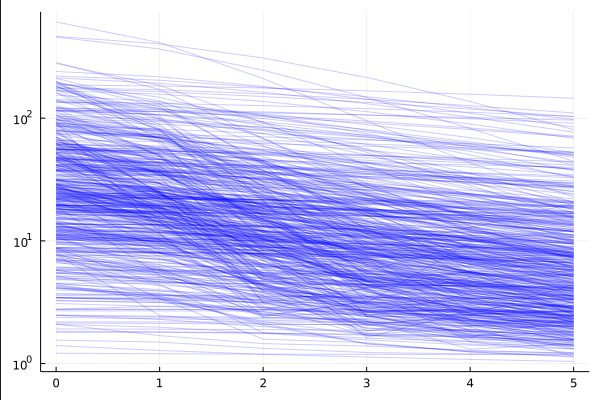
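For reference, the quantities CFR tracks can be written out as follows. This is the commonly used formulation, given here as an assumption about what the slides present; the symbols \(I\) (information set), \(h\) (history), \(z \in Z\) (terminal history), \(\pi^{\sigma}\) (reach probability), and \(A(I)\) (actions available at \(I\)) do not appear in the slides. The counterfactual value of information set \(I\) for player \(i\) weights each history by the probability that the other players reach it:

\[
v_i^{\sigma}(I) = \sum_{h \in I} \pi_{-i}^{\sigma}(h) \sum_{z \in Z,\ h \sqsubseteq z} \pi^{\sigma}(h, z)\, u_i(z)
\]

The instantaneous and cumulative counterfactual regrets for action \(a\), where \(\sigma^t_{I \to a}\) is \(\sigma^t\) modified to always play \(a\) at \(I\), are

\[
r^t(I, a) = v_i^{\sigma^t_{I \to a}}(I) - v_i^{\sigma^t}(I), \qquad R^T(I, a) = \sum_{t=1}^{T} r^t(I, a)
\]

Regret matching is then applied at every information set, with \(R^{T,+} = \max(R^T, 0)\):

\[
\sigma^{T+1}(I, a) =
\begin{cases}
\dfrac{R^{T,+}(I, a)}{\sum_{b \in A(I)} R^{T,+}(I, b)} & \text{if } \sum_{b \in A(I)} R^{T,+}(I, b) > 0 \\[2ex]
\dfrac{1}{|A(I)|} & \text{otherwise}
\end{cases}
\]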

Vanilla CFR

(ES)MCCFR

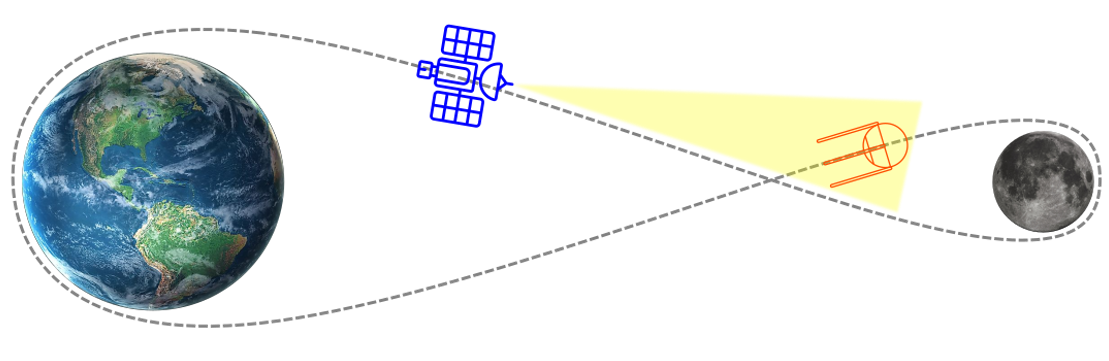

Exhaustive Tree Search

- Search time exponential in search horizon

- Long convergence time for long planning horizon problems
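The exponential growth can be made concrete with a small count, assuming a complete tree with the same number of actions at every decision. `full_tree_nodes` is a hypothetical helper for illustration.

```python
def full_tree_nodes(branching_factor, horizon):
    """Count nodes in a complete tree: 1 + b + b^2 + ... + b^horizon."""
    return sum(branching_factor ** depth for depth in range(horizon + 1))


for horizon in (2, 5, 10):
    print(horizon, full_tree_nodes(3, horizon))
```

With three actions per decision, a horizon of 2 gives 13 nodes and a horizon of 10 gives 88,573.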

Sparse Tree Search

- Faster search/convergence time

- Allows sampling from opponent strategy to find maximally exploitative counter-strategy (data-driven)
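The data-driven idea in the last bullet can be sketched in its simplest, one-step form: estimate each action's value from sampled opponent actions and pick the best. This is a matrix-game analogue under assumed names (`sampled_best_response`, `sample_opponent`), not (ES)MCCFR itself, and the opponent distribution is invented for the example.

```python
import random

RPS = [[0, -1, 1], [1, 0, -1], [-1, 1, 0]]  # row player's payoff; order R, P, S


def sampled_best_response(payoffs, sample_opponent_action, num_samples):
    """Estimate action values from opponent samples and return the best action."""
    totals = [0.0] * len(payoffs)
    for _ in range(num_samples):
        opponent_action = sample_opponent_action()
        for action, row in enumerate(payoffs):
            totals[action] += row[opponent_action]
    estimates = [t / num_samples for t in totals]
    best = max(range(len(estimates)), key=estimates.__getitem__)
    return best, estimates


rng = random.Random(0)  # fixed seed so the run is repeatable


def sample_opponent():
    # Hypothetical static opponent that favours rock.
    return rng.choices([0, 1, 2], weights=[0.6, 0.2, 0.2])[0]


best_action, value_estimates = sampled_best_response(RPS, sample_opponent, 2000)
print(best_action, value_estimates)
```

Only samples of the opponent's play are needed, not the strategy itself. Here the estimates favour paper (index 1), the best response to a rock-heavy opponent.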

[Diagram labels: 1, 2, ..., \(N\)]

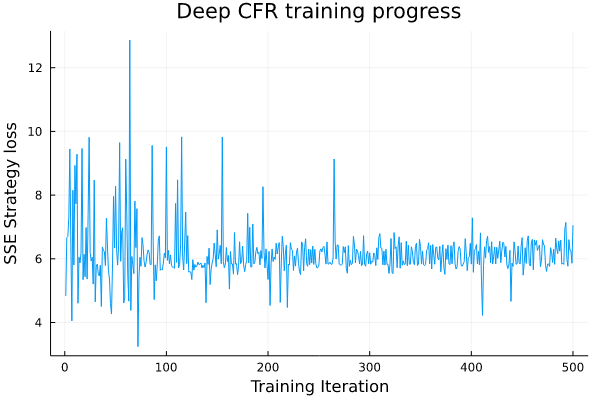

Deep CFR

Adam (0.01)
