Stochastic Dynamic Games in Belief Space

Wilko Schwarting, Alyssa Pierson, Sertac Karaman, Daniela Rus

LQR

iLQR

iterative LQ Games

iLQG

Belief Space iLQG

Belief Space Dynamic Games

Preliminaries

\(N\) agents \(\{1,\dots,N\}\)

Joint State: \(x_k \in \mathbb{R}^{n_{x}}\)

Joint Action: \(u_k \in \mathbb{R}^{n_{u}}\)

Joint Measurement: \(z_k \in \mathbb{R}^{n_{z}}\)

Belief Dynamics

analogous to

Belief Update

Analytical Bayes filter solution intractable

Resort to EKF
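As a rough illustration of the EKF step, here is a minimal scalar sketch: predict with the linearized dynamics, then correct with the linearized measurement model. The models `f` and `h`, their derivatives, and the noise variances `q_var` and `r_var` are hypothetical placeholders, not the models used in the paper.

```python
import math

def ekf_belief_update(mean, var, u, z, f, h, df_dx, dh_dx, q_var, r_var):
    """One scalar EKF step; returns the posterior (mean, variance)."""
    # Predict: push the mean through the dynamics and inflate the variance.
    mean_pred = f(mean, u)
    a = df_dx(mean, u)
    var_pred = a * var * a + q_var
    # Update: linearize the measurement model around the predicted mean.
    c = dh_dx(mean_pred)
    gain = var_pred * c / (c * var_pred * c + r_var)  # Kalman gain
    mean_post = mean_pred + gain * (z - h(mean_pred))
    var_post = (1.0 - gain * c) * var_pred
    return mean_post, var_post

# Hypothetical toy models, chosen only for illustration.
f = lambda x, u: x + 0.1 * u
df_dx = lambda x, u: 1.0
h = lambda x: math.sin(x)
dh_dx = lambda x: math.cos(x)

mean, var = ekf_belief_update(0.0, 1.0, u=1.0, z=0.2, f=f, h=h,
                              df_dx=df_dx, dh_dx=dh_dx,
                              q_var=0.01, r_var=0.1)
```

The posterior variance ends up below the predicted variance because the measurement carries information about the state.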

Belief Update

Their Notation

Our Notation

Belief Update

\(\xi_k\) accounts for both measurement and transition noise

Vectorize belief:
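A minimal sketch of one way to vectorize a Gaussian belief, assuming the vector stacks the mean followed by the column-major entries of the covariance. The function names and the stacking order are assumptions made for illustration.

```python
def vectorize_belief(mean, cov):
    """Stack the mean and the column-major vec of the covariance."""
    n = len(mean)
    vec_cov = [cov[r][c] for c in range(n) for r in range(n)]
    return list(mean) + vec_cov

def unvectorize_belief(b, n):
    """Inverse of vectorize_belief for an n-dimensional state."""
    mean = list(b[:n])
    flat = b[n:]
    cov = [[flat[c * n + r] for c in range(n)] for r in range(n)]
    return mean, cov

b = vectorize_belief([1.0, 2.0], [[0.5, 0.1], [0.1, 0.3]])
# b has length n + n*n = 6
```

Treating the belief as one flat vector lets the planner handle it like an ordinary state, at the cost of a dimension that grows quadratically in \(n_x\).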

POMDP Best Response Game

Expected Return for agent \(i\)

\(c_l\) - cost at final time-step (terminal cost)

\(c_k\) - cost for any intermediary time step

(\(\pi^i\) is a function of \(\pi^{\neg i}\))
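To make the expected return concrete, here is a hedged Monte Carlo sketch: roll out the joint policies under sampled noise, sum the intermediate costs, add the terminal cost, and average. It assumes a scalar belief and scalar noise for brevity; `step`, `stage_cost`, `terminal_cost`, and the policies are hypothetical callables.

```python
import random

def expected_return(b0, policies, step, stage_cost, terminal_cost,
                    horizon, samples=1000, seed=0):
    """Monte Carlo estimate of one agent's expected return."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        b, ret = b0, 0.0
        for k in range(horizon):
            u = [pi(b, k) for pi in policies]  # joint action of all agents
            ret += stage_cost(b, u, k)         # intermediate cost c_k
            xi = rng.gauss(0.0, 1.0)           # noise sample xi_k
            b = step(b, u, xi)
        total += ret + terminal_cost(b)        # terminal cost c_l
    return total / samples
```

Because the cost of agent \(i\) depends on the joint action, changing any other agent's policy changes the value this function returns, which is what makes the problem a game rather than \(N\) separate POMDPs.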

Iterative Dynamic Programming

Necessary condition of local Nash Equilibrium:

Optimize over perturbations

Quadratic Value Approximation

Nominal

Feed-forward

Feedback
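The three terms above can be read as an affine policy around the nominal trajectory: nominal control, plus a feed-forward correction, plus feedback on the belief deviation. The sketch below assumes the form \(u = \bar{u} + j + K(b - \bar{b})\); the symbol names `j` and `K` are assumptions, since the slide equation did not survive extraction.

```python
def policy(b, b_nom, u_nom, j, K):
    """Affine policy: nominal + feed-forward + feedback on belief deviation."""
    db = [bi - bni for bi, bni in zip(b, b_nom)]
    return [
        u_nom[r] + j[r] + sum(K[r][c] * db[c] for c in range(len(db)))
        for r in range(len(u_nom))
    ]

# One control input, two belief dimensions (illustrative numbers).
u = policy(b=[1.0, 2.0], b_nom=[0.0, 0.0], u_nom=[1.0], j=[0.5],
           K=[[-1.0, 0.5]])
```

On the nominal trajectory the feedback term vanishes, so the policy reduces to the nominal control plus the feed-forward correction.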

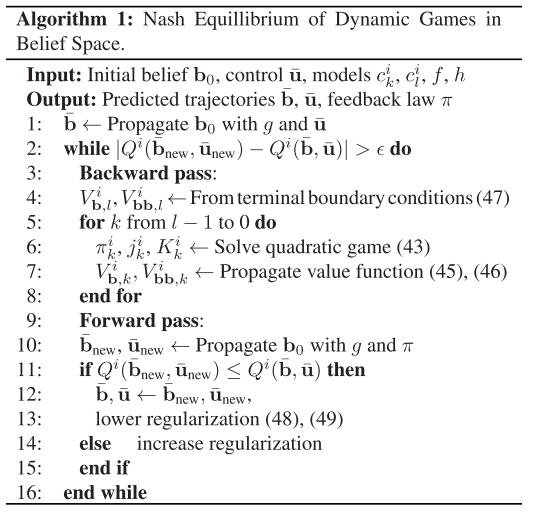

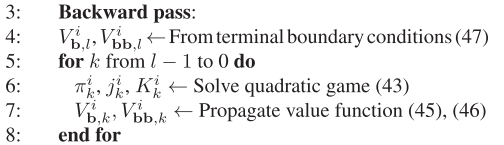

Backward Pass

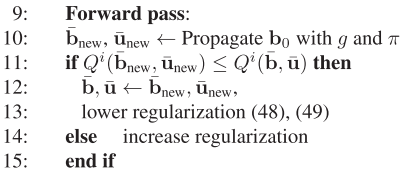
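For intuition only, here is a single-agent, scalar, linear-quadratic simplification of a backward pass. It is not the coupled multi-agent recursion from the paper: the game version solves for all agents' gains jointly at each step. The model is assumed to be \(b_{k+1} = a\,b_k + B\,u_k\) with cost \(\tfrac{1}{2}(q\,b^2 + r\,u^2)\), so the feed-forward terms are zero and only feedback gains are computed.

```python
def backward_pass(a, B, q, r, horizon):
    """Scalar Riccati recursion; returns time-ordered gains and initial V_bb."""
    V = q  # curvature of the terminal cost
    gains = []
    for _ in range(horizon):
        Q_uu = r + B * V * B   # curvature of Q with respect to the control
        Q_ub = B * V * a       # cross term between control and belief
        K = -Q_ub / Q_uu       # feedback gain minimizing the quadratic model
        V = q + a * V * a - Q_ub * Q_ub / Q_uu
        gains.append(K)
    gains.reverse()            # computed last-to-first; return first-to-last
    return gains, V

gains, V0 = backward_pass(a=1.0, B=1.0, q=1.0, r=1.0, horizon=2)
```

The recursion starts from the terminal cost and moves backward in time, which is why the gains are reversed before they are returned.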

Forward Pass
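A minimal sketch of a forward pass, assuming a scalar belief, a noise-free rollout, and a line-search factor `alpha` that scales the feed-forward term. These are common iLQR conventions and are assumptions here; `dynamics` is a hypothetical callable.

```python
def forward_pass(b0, b_nom, u_nom, j, K, dynamics, alpha=1.0):
    """Roll out the updated policy to obtain a new nominal trajectory."""
    b = b0
    new_b, new_u = [b0], []
    for k in range(len(u_nom)):
        # Nominal control + scaled feed-forward + feedback on the deviation.
        u = u_nom[k] + alpha * j[k] + K[k] * (b - b_nom[k])
        b = dynamics(b, u)
        new_u.append(u)
        new_b.append(b)
    return new_b, new_u

new_b, new_u = forward_pass(
    b0=1.0, b_nom=[0.0, 0.0, 0.0], u_nom=[0.0, 0.0],
    j=[0.0, 0.0], K=[-0.6, -0.5], dynamics=lambda b, u: b + u)
```

The returned beliefs and controls become the nominal trajectory for the next backward pass.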

Control Regularization

Belief Regularization
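One common way to implement both regularizers in iLQR-style solvers is sketched below for the scalar case. It assumes control regularization adds `mu_u` to the control curvature and belief regularization adds `mu_b` to the value curvature before it is propagated through the dynamics. Whether the paper uses exactly this scheme is an assumption, and all parameter names are hypothetical.

```python
def regularized_gains(Q_u, l_uu, l_ub, f_u, f_b, V_bb, mu_u=0.0, mu_b=0.0):
    """Scalar feed-forward and feedback gains with two regularizers."""
    # mu_b penalizes deviation of the next belief from the nominal one;
    # mu_u penalizes large changes in the control itself.
    Q_uu = l_uu + f_u * (V_bb + mu_b) * f_u + mu_u
    Q_ub = l_ub + f_u * (V_bb + mu_b) * f_b
    j = -Q_u / Q_uu    # feed-forward term
    K = -Q_ub / Q_uu   # feedback gain
    return j, K

j_plain, K_plain = regularized_gains(1.0, 1.0, 0.0, 1.0, 1.0, 1.0)
j_reg, K_reg = regularized_gains(1.0, 1.0, 0.0, 1.0, 1.0, 1.0, mu_u=2.0)
```

In this example the control regularizer halves the feed-forward step, which trades convergence speed for robustness when the quadratic model is poor.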

Experimental Results

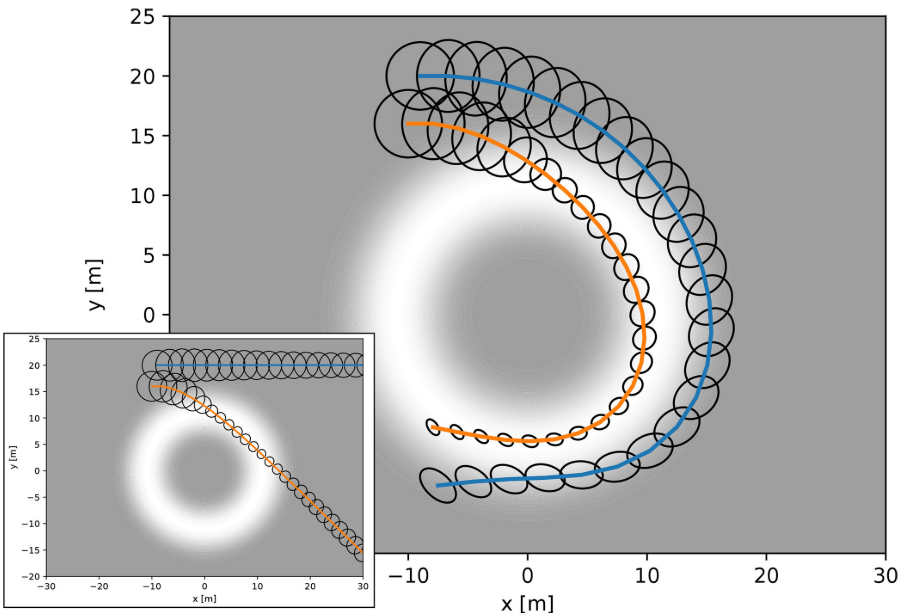

Active Surveillance

Agent 1 - observe agent 2

Agent 2 - maintain constant speed

Experimental Results

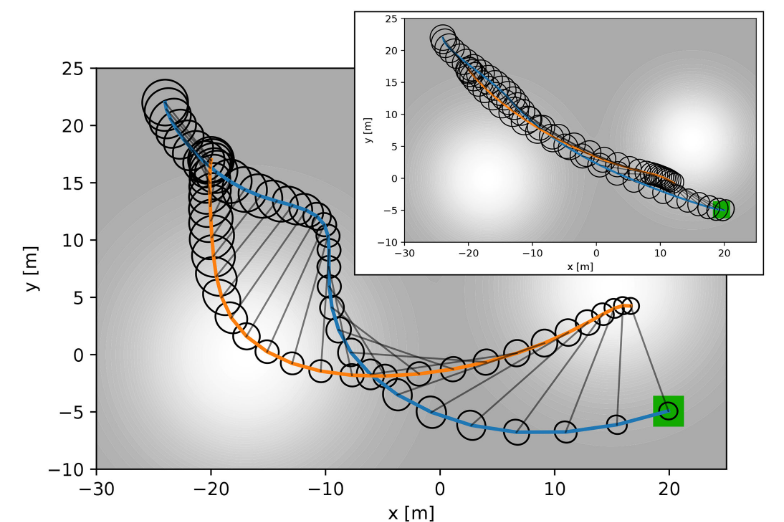

Guide Dog for Blind Agent

Agent 1 - Guide agent 2 to goal with low uncertainty

Agent 2 - No navigational control

Experimental Results

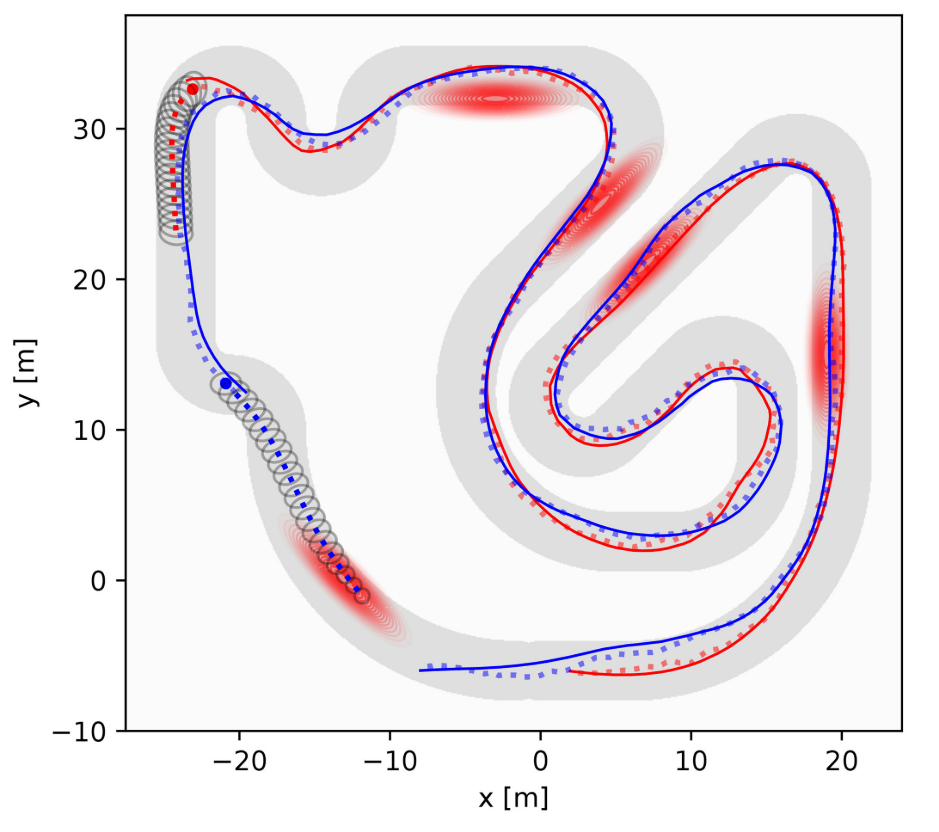

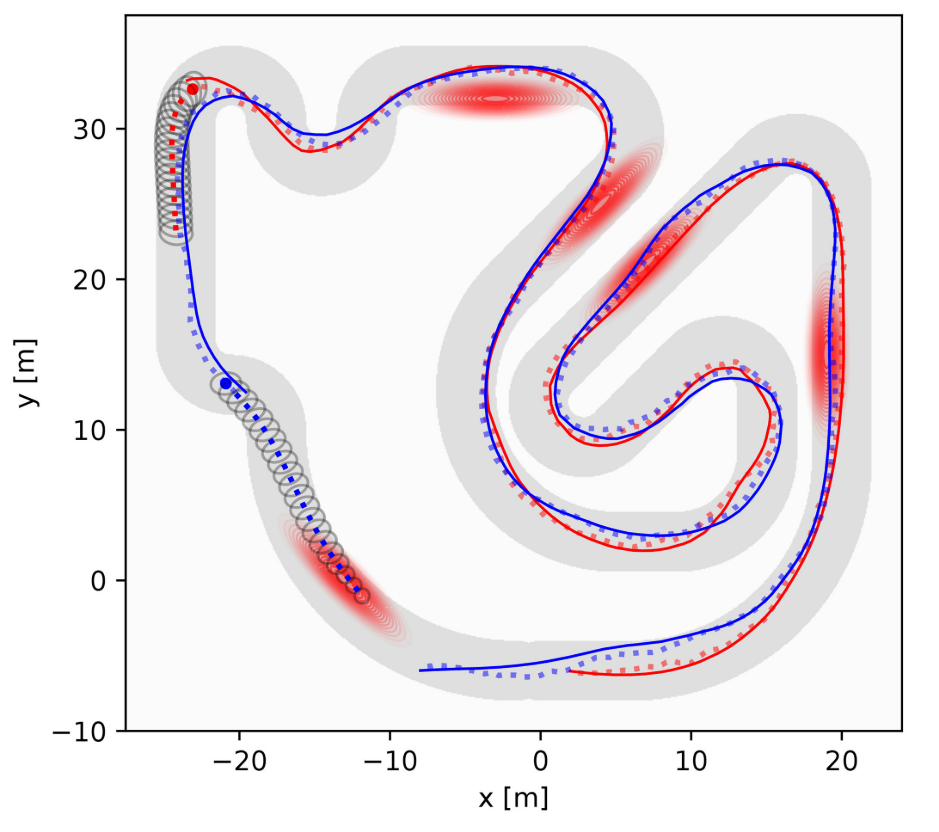

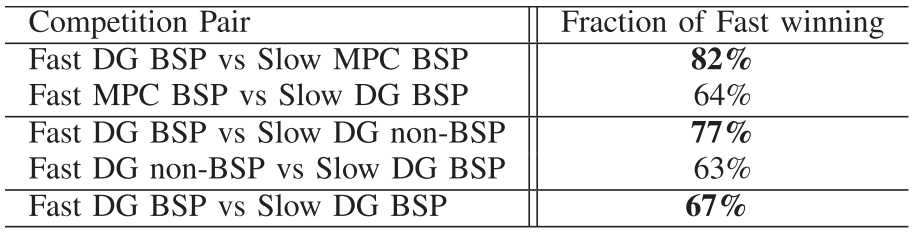

Competitive Racing

Agent 1 - Faster than agent 2 but starts behind agent 2

Agent 2 - Slower than agent 1 but starts ahead

Stochastic Dynamic Games in Belief Space

By Tyler Becker
